One of the responsibilities of product managers is to identify the most important projects to work on. Prioritizing is a necessary component of this task.

Having limited resources and too many features can be daunting. Choosing which features to work on, and when, is essential to prevent delays and a poorly implemented product.

This is where a prioritization framework comes in. A good scoring system will help you consider all of a project idea's fundamental elements with discipline and combine them in a methodical and logical way.

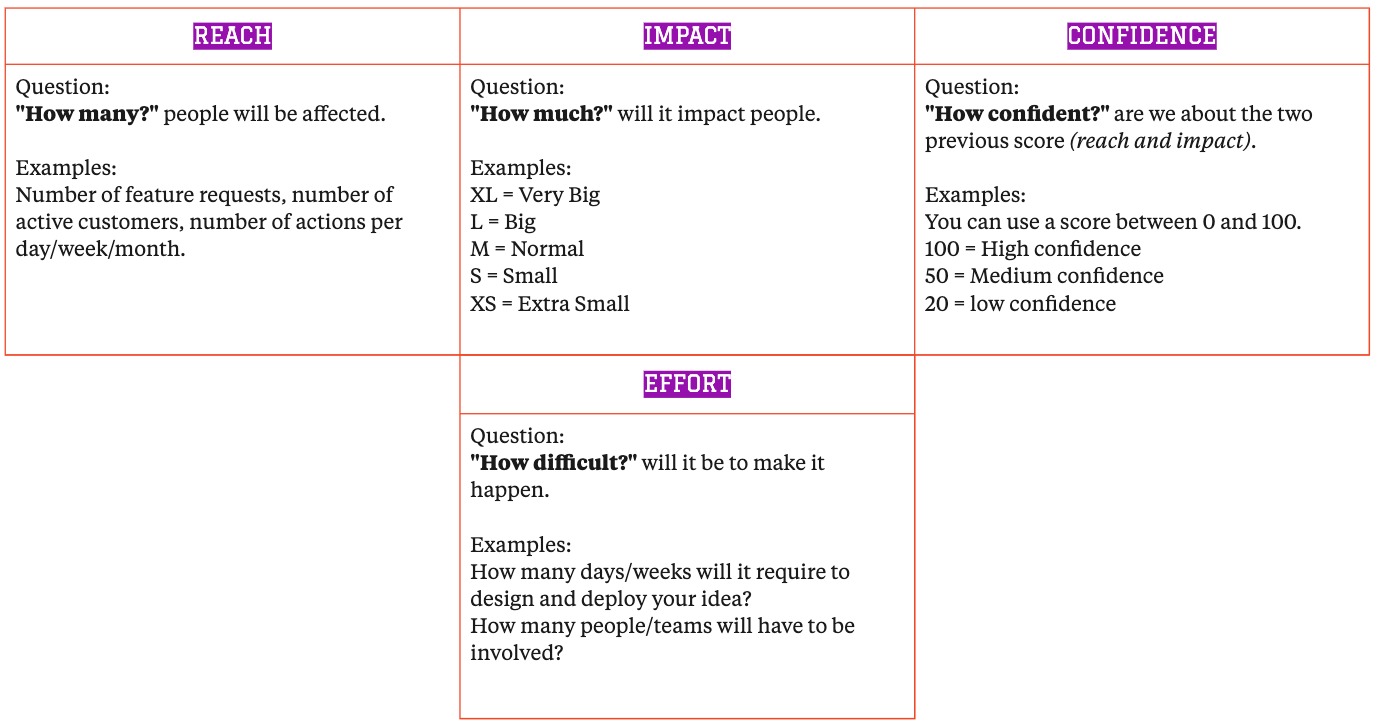

Reach – This determines how many people your initiative will reach in a given timeframe. Your specific initiative will determine the context of the reach. From there, determine how long you will monitor this reach and give your “reach” a total score. For example, we expect 1000 people to visit the site in 30 days.

Impact – The number of people who take action on your initiative during the scheduled time frame. For example, of the 1000 people who visit the site in 30 days, we expect 30 to buy a product. Those 30 people are the total measure of impact.

Intercom’s five-tier scoring system is helpful in measuring expected impact:

3 = massive impact (XL)

2 = high impact (L)

1 = medium impact (M)

.5 = low impact (S)

.25 = minimal impact (XS)

Confidence – Your confidence score is a balanced measurement of the data you have to back up your potential Reach and Impact scores combined with your sense and intuition. For example, your analytics are showing that your traffic based on similar products should reach approximately 1000 people in 30 days, and your gut tells you that your new product will get about 30 new buyers.

Intercom also created a scoring method for confidence scores.

100% = high confidence

80% = medium confidence

50% = low confidence
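As a minimal sketch, the two Intercom scales above can be kept as lookup tables so that everyone scoring an idea uses the same numbers. The names `IMPACT_SCALE`, `CONFIDENCE_SCALE`, and `scale_value` are illustrative assumptions, not part of any RICE tooling.

```python
# Illustrative lookup tables for the impact and confidence scales listed above.
# IMPACT_SCALE, CONFIDENCE_SCALE and scale_value are hypothetical names.
IMPACT_SCALE = {
    "massive": 3.0,
    "high": 2.0,
    "medium": 1.0,
    "low": 0.5,
    "minimal": 0.25,
}

CONFIDENCE_SCALE = {
    "high": 1.0,    # 100%
    "medium": 0.8,  # 80%
    "low": 0.5,     # 50%
}


def scale_value(scale: dict[str, float], label: str) -> float:
    """Return the numeric value for a label, rejecting labels outside the scale."""
    key = label.strip().lower()
    if key not in scale:
        raise ValueError(f"unknown label {label!r}; expected one of {sorted(scale)}")
    return scale[key]


print(scale_value(IMPACT_SCALE, "High"))
print(scale_value(CONFIDENCE_SCALE, "medium"))
```

Rejecting unknown labels keeps ad hoc values such as "very high" from slipping into the scoring.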

Effort – So far we’ve covered reach, impact, and confidence, which all reference a benefit to your product or initiative. Effort, however, represents the cost of your initiative. For this reason, it plays a decisive role in your overall RICE score: it is the number the benefits are divided by. A RICE score is essentially a simplified cost-benefit analysis for your initiative or product. To determine your “effort” score, look at how many people it will take to complete each task and how much time it will take those people. For example, it will take 5 people 6 months to complete this project, which is 30 person-months. This clarifies your cost as well as your score. Use the same five-tier scale that was introduced for Impact, applied to effort:

3 = massive effort

2 = high effort

1 = medium effort

.5 = low effort

.25 = minimal effort

Creating a score with RICE

To create your overall RICE score, multiply all of your benefits and then divide by your costs.

(Reach × Impact × Confidence) ÷ Effort = Total Score
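The formula above can be sketched as a small function. This is only an illustration: the function name `rice_score`, the input checks, and the sample numbers are assumptions, and confidence is passed as a fraction (0.8 for 80%).

```python
def rice_score(reach: float, impact: float, confidence: float, effort: float) -> float:
    """Compute (reach * impact * confidence) / effort.

    confidence is a fraction (0.8 for 80%); effort must be positive.
    """
    if effort <= 0:
        raise ValueError("effort must be greater than zero")
    if not 0 <= confidence <= 1:
        raise ValueError("confidence must be a fraction between 0 and 1")
    return reach * impact * confidence / effort


# Hypothetical initiative: 1000 people reached, high impact (2),
# medium confidence (80%), 4 units of effort.
score = rice_score(reach=1000, impact=2, confidence=0.8, effort=4)
print(score)
```

Whatever units you choose for reach and effort, keep them the same across all the ideas you compare, since the score is only meaningful as a relative ranking.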

For example, Reach (3) × Impact (3) × Confidence (100%, or 1.0) ÷ Effort (2) = 4.5.

In order to better understand how to calculate the parameters and then implement this prioritization framework in your company, let's first take a quick look at how the different elements work and the questions they answer.

Should Product Managers use RICE?

Your strategy and vision have to be set way before you decide to prioritize your roadmap. This is one of the important principles you need to know to build a Product Roadmap.

As a recurring team exercise (monthly or quarterly), the RICE methodology encourages your team to think about the problem they are solving from a specific perspective, and it offers a number of advantages.

Metric definition: Thinking about your product improvements with the RICE framework in mind will help your team redefine what metric matters when it comes to success.

Data-driven decision: To use the RICE scoring model, you need to consider clear data points, such as the number of requests or the overall number of users affected by a feature. This removes emotions and opinions from prioritization debates.

Flexible Framework: You can easily adapt the model to your reality. Use it to prioritize ideas, features, and initiatives.

Favor alignment: Because the score covers critical variables that everyone can understand, it helps you align everyone on your roadmap, internally and externally.

Factors to consider before using the RICE Scoring Model

Before using the RICE prioritization framework, there are a few things you need to consider:

1. RICE is not a scientific method.

The decision to focus on a lower-scoring initiative or to discard a feature with a higher score could be influenced by various factors, such as the dependencies between two projects.

2. There are various ways to calculate each element of the RICE method.

It's important to make sure RICE aligns with your company's strategy and methodologies before you implement it, and to keep the first implementation steps simple to drive adoption.

3. Start by asking the right questions

How to quantify your REACH?

How to qualify your IMPACT?

How to assess your CONFIDENCE level?

How to estimate your EFFORTS?

Now that you have a better understanding of how the RICE system works, it's time to examine it in more depth.

RICE as a framework for assessing priorities

Quantify your REACH

An excellent way to think about this criterion is by tracking how many requests there are for a particular feature or how many customers will be affected over time.

It could be as simple as “How many users requested this functionality?” or “How many customers will this project impact over the next quarter?” You could also consider the number of affected “transactions” or “activities” per week or month.

It mostly depends on which product metrics or KPIs you decide to focus on, so pick the ones that are most relevant in your context.

What we recommend:

After using RICE internally, we recommend relying on the number of requests received through feedback, customer interviews, chat support, etc. to quantify your reach.

Qualify your IMPACT

Impact also depends on what metric matters most for your business and what exactly you want to influence while building something new or improving something that already exists.

For Intercom, it was “How much will this project increase conversion rate when a customer encounters it?” It could also be, “How much will this initiative increase the number of users needed within one account?” or “How much will this feature drive the adoption of higher pricing plans?”

When the answer is "dramatically", your initiative is scored XL. When the effect is less significant, it is scored closer to XS.

What we recommend:

After using RICE internally, we recommend leveraging the combined value of the customers who requested a specific feature to qualify your impact.
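As a rough sketch of the two recommendations above, a feedback log can be reduced to a request count (reach) and a combined customer value (impact) per feature. The log layout, the feature and customer names, the values, and the function `summarize_requests` are all hypothetical. Counting each customer's value only once, even when they ask repeatedly, is also an assumption.

```python
from collections import defaultdict

# Hypothetical feedback log: (feature, customer, annual contract value).
requests = [
    ("sso", "acme", 12000),
    ("sso", "globex", 30000),
    ("dark-mode", "acme", 12000),
    ("sso", "acme", 12000),  # repeat request from the same customer
]


def summarize_requests(rows):
    """Return {feature: (request_count, combined_customer_value)}."""
    counts = defaultdict(int)    # every request counts toward reach
    values = defaultdict(dict)   # customer -> value, so each customer counts once
    for feature, customer, value in rows:
        counts[feature] += 1
        values[feature][customer] = value
    return {f: (counts[f], sum(values[f].values())) for f in counts}


summary = summarize_requests(requests)
print(summary)
```

The combined value would still need to be mapped onto the XS to XL impact scale using thresholds your team agrees on.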

Assess your CONFIDENCE level

It is easy to get excited about a new project, so scoring your confidence level explicitly helps compensate for enthusiasm that is not backed by enough information, skills, or resources.

The confidence level is often given as a percentage, but you could also express it as a score out of 50, for instance.

While calculating your confidence level, be honest with yourself:

Do you have metrics to back up your reach and impact estimates, and can you track them continuously to measure how they evolve? Give your project a 100% chance, or a score of 50/50.

Are your customers asking for AI while you are still hiring the right people to build it? Give your project a 70% chance, or a score of 35/50.

Do you have a hard time measuring the project's reach and impact at all? Your initiative should not get more than a 50% chance, or a score of 25/50.

What we recommend:

After using RICE internally, we recommend measuring your confidence level by asking all of the main stakeholders to give their confidence scores out of 100 points. Then, remove the highest and lowest scores and average the remaining ones to get your confidence score.
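The averaging step described above amounts to a trimmed mean. A minimal sketch, where the function name `average_confidence` and the sample scores are assumptions:

```python
def average_confidence(scores: list[float]) -> float:
    """Drop one highest and one lowest score, then average the rest (0-100 scale)."""
    if len(scores) < 3:
        raise ValueError("need at least three scores to drop the highest and lowest")
    trimmed = sorted(scores)[1:-1]
    return sum(trimmed) / len(trimmed)


# Hypothetical scores from five stakeholders, each out of 100.
stakeholder_scores = [90, 60, 80, 100, 70]
print(average_confidence(stakeholder_scores))  # 80.0
```

Dropping the extremes keeps a single very optimistic or very pessimistic stakeholder from skewing the result.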

Estimate your EFFORTS

Once we know how many people will be affected, how much they will be impacted, and how confident we are that all of this will occur, we can estimate the effort and look for the path of least resistance.

The ideal candidates are functionalities with high reach and impact, a high level of confidence, and a low level of effort. Effort can be measured in "person-months" or as the "number of days/weeks" needed to develop the feature.

Person-months are harder to gauge, since you have to account for both head count and duration. Our advice, based on our own experience, is to use an approximate number of days needed to plan, develop, verify, and deploy the project you are assessing.

What we recommend:

To estimate your effort, we recommend that you first think about it in terms of days of development and ask your development team for a close estimate. Once you have this estimate, you can take into consideration dependencies with other projects and the effort involved with those.

If a project has more than two dependencies, we recommend doubling your effort estimate to cover them. Alternatively, if the dependencies have already been estimated, simply add their effort estimates to your own.

RICE Effort Estimation Example:
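A minimal sketch of the dependency rule described above, using made-up numbers. The function name `effort_with_dependencies` and its parameters are hypothetical. It assumes that estimates for dependencies are added whenever they are known, and that the doubling applies only when there are more than two dependencies without estimates.

```python
from typing import Optional


def effort_with_dependencies(
    base_days: float,
    dependency_count: int,
    dependency_days: Optional[list[float]] = None,
) -> float:
    """Adjust a development estimate (in days) for dependencies."""
    if dependency_days is not None:
        # Dependencies already forecasted: add their estimates.
        return base_days + sum(dependency_days)
    if dependency_count > 2:
        # More than two dependencies without estimates: double the estimate.
        return base_days * 2
    return base_days


# Hypothetical project estimated at 10 days of development.
print(effort_with_dependencies(10, dependency_count=1))                             # 10
print(effort_with_dependencies(10, dependency_count=3))                             # 20
print(effort_with_dependencies(10, dependency_count=3, dependency_days=[4, 6, 2]))  # 22
```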

Conclusion

Identifying Reach and Effort is usually the most challenging part of the equation. Work with your stakeholders to agree on how to assess Reach.

Work with your developers to come up with a consistent way to score the effort of something without taking up too much of the team's time (requirements come later; for now we just need to evaluate whether we should even push forward with the idea). Teams sometimes conclude that a T-shirt sizing mechanism (S, M, L, XL, etc.), with ranges around those sizes, is best for this level of estimation. I don't recommend doing this for the Product Backlog, but for this level of estimating it should be fine.

The most important thing is to come up with an ideation or innovation process that you can use to prioritize the infinite list of ideas that come in and then pair that with the capacity of your team to determine what can be accomplished in reality with all of the current initiatives.
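One possible way to pair scored ideas with team capacity is sketched below: rank ideas by RICE score, then pick them greedily until the capacity is used up. The greedy selection is an assumption of this sketch, not a method prescribed by RICE, and the `Idea` class, `plan` function, and backlog values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Idea:
    name: str
    reach: float
    impact: float
    confidence: float  # fraction, e.g. 0.8 for 80%
    effort: float      # e.g. days of work

    @property
    def score(self) -> float:
        return self.reach * self.impact * self.confidence / self.effort


def plan(ideas: list[Idea], capacity: float) -> list[Idea]:
    """Greedy pick: highest score first, skipping ideas that no longer fit."""
    chosen, remaining = [], capacity
    for idea in sorted(ideas, key=lambda i: i.score, reverse=True):
        if idea.effort <= remaining:
            chosen.append(idea)
            remaining -= idea.effort
    return chosen


# Hypothetical backlog and a capacity of 20 days.
backlog = [
    Idea("bulk export", reach=500, impact=1, confidence=0.8, effort=10),
    Idea("sso", reach=200, impact=3, confidence=1.0, effort=20),
    Idea("dark mode", reach=800, impact=0.5, confidence=0.8, effort=5),
]
print([idea.name for idea in plan(backlog, capacity=20)])
# ['dark mode', 'bulk export']
```

Because RICE is not a scientific method, treat the output as a starting point for discussion: dependencies or strategy may still justify picking a lower-scoring idea.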